This integration is for the Respan gateway.

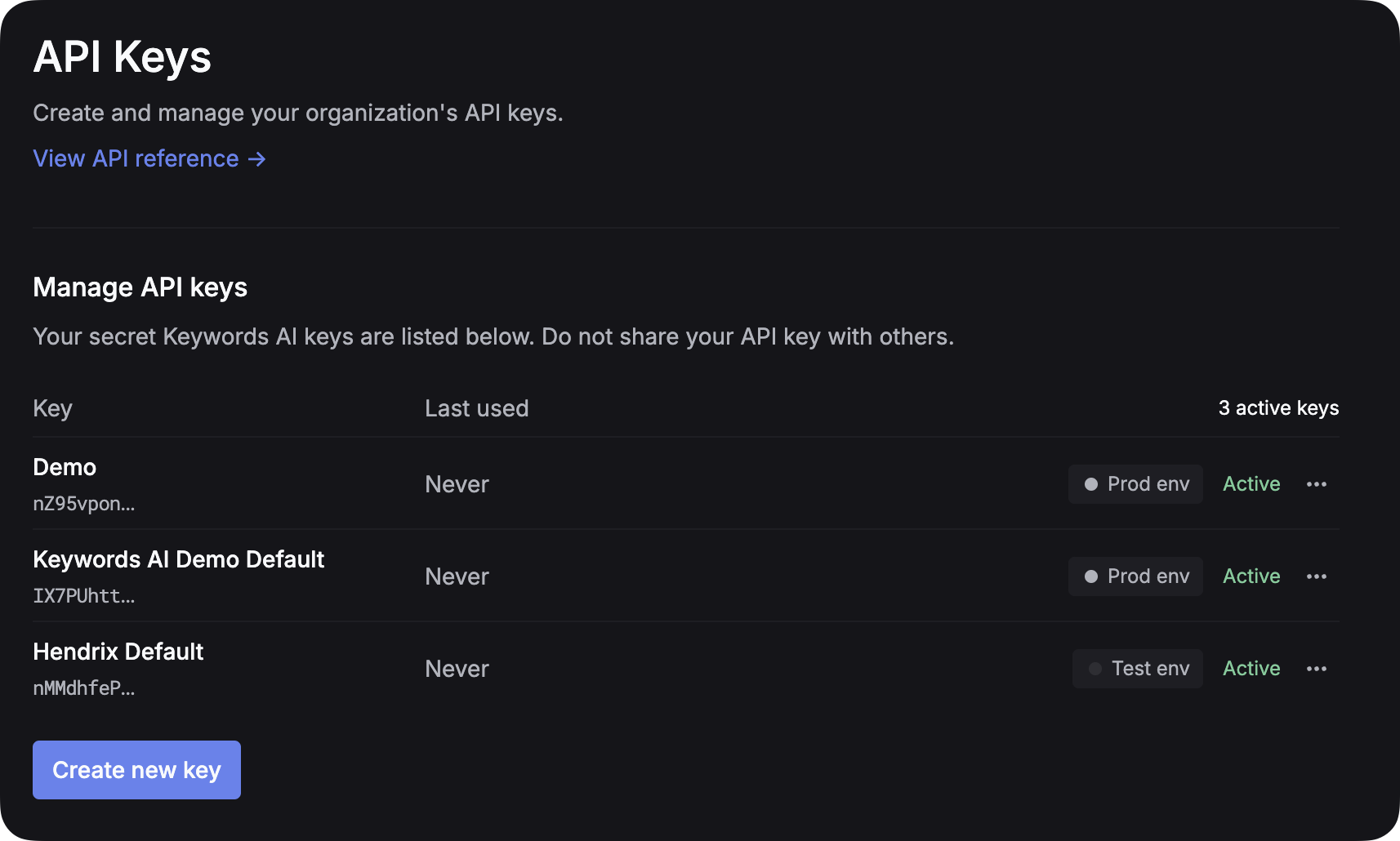

1. Get Respan API key

After you create an account on Respan, you can get your API key from the API keys page.

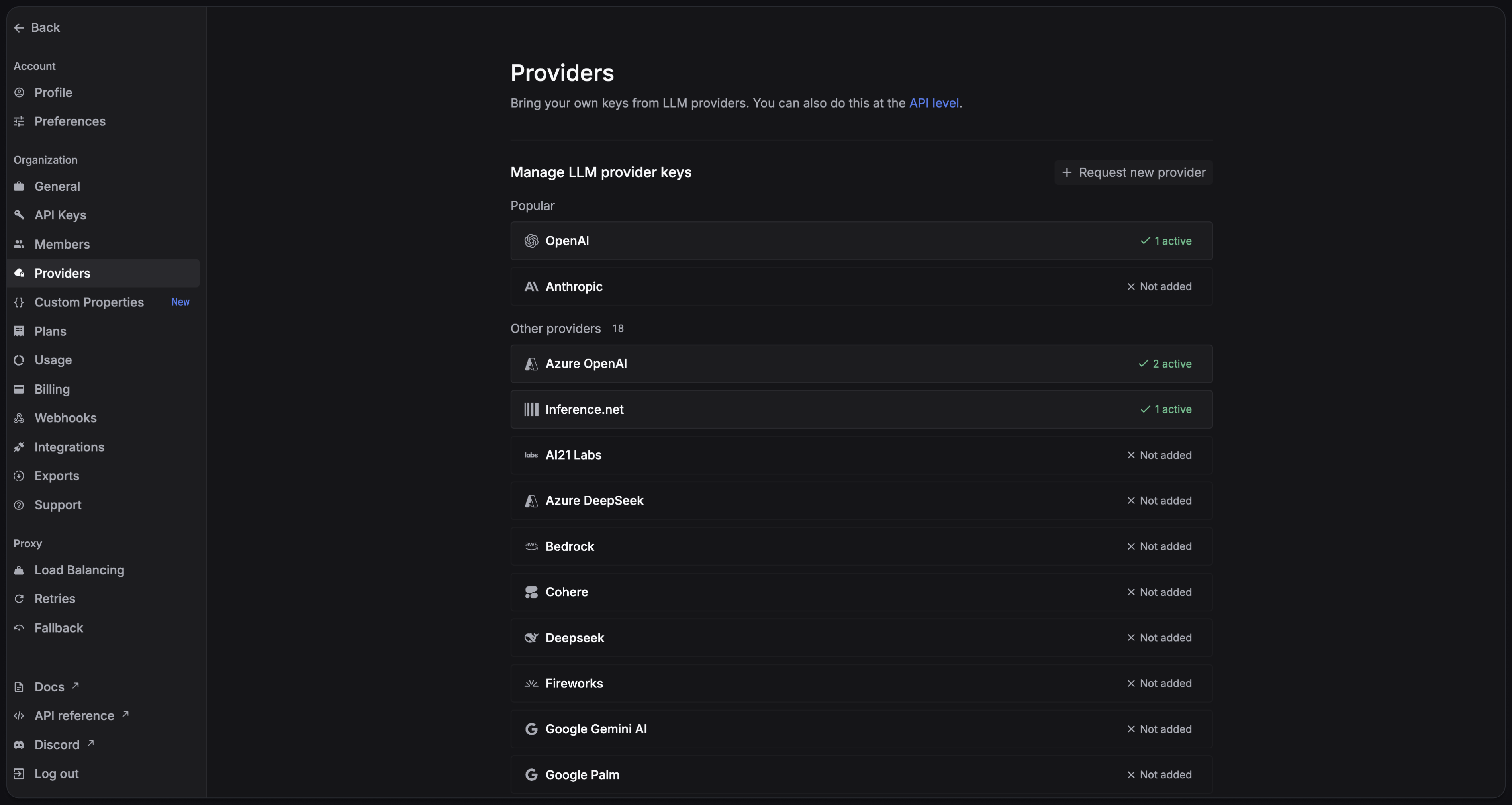

2. Add provider credentials

You must add your own provider credentials to activate the AI gateway; otherwise, your LLM calls will fail. We use your credentials to call LLMs on your behalf. We won’t use them for any other purpose, and no extra charges apply. Go to the Providers page to add your credentials.

3. Compatibility at a glance

| SDK helper | Works via Respan? | Switch models? |
|---|---|---|
| `@ai-sdk/openai` | ✅ Yes | ✅ Yes |
| `@ai-sdk/anthropic` | ✅ Yes (Anthropic models only) | ❌ No |
| `@ai-sdk/google` | ✅ Yes | ✅ Yes |

4. Integrate Respan gateway

- OpenAI

- Anthropic

- Google Gemini

The example for this step uses the Vercel AI SDK with OpenAI; a second variant uses the response API. You can find the full source code for this example on GitHub: TypeScript Example.
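As a minimal sketch of the wiring, assuming the gateway exposes an OpenAI-compatible endpoint: the `@ai-sdk/openai` helper accepts `baseURL` and `apiKey` settings, so routing traffic through Respan comes down to building those settings. The base URL below is a placeholder, not the real Respan endpoint, and `buildGatewaySettings` is a hypothetical helper introduced only for illustration. Take the actual URL from your Respan dashboard or docs.

```typescript
// Subset of the settings shape accepted by createOpenAI() in @ai-sdk/openai.
interface GatewaySettings {
  baseURL: string;
  apiKey: string;
  headers?: Record<string, string>;
}

// Hypothetical helper: builds provider settings that point the OpenAI helper
// at the gateway. Both values come from the caller; no Respan URL is assumed.
function buildGatewaySettings(apiKey: string, baseURL: string): GatewaySettings {
  if (!apiKey) throw new Error("Missing Respan API key");
  if (!baseURL) throw new Error("Missing gateway base URL");
  // Strip trailing slashes so paths appended later do not produce "//".
  return { apiKey, baseURL: baseURL.replace(/\/+$/, "") };
}

// Placeholder values for illustration only.
const settings = buildGatewaySettings(
  "YOUR_RESPAN_API_KEY",
  "https://gateway.example.com/api/",
);

// With the SDK installed, the settings would be used roughly like this:
//   import { createOpenAI } from "@ai-sdk/openai";
//   import { generateText } from "ai";
//   const openai = createOpenAI(settings);
//   const { text } = await generateText({ model: openai("gpt-4o-mini"), prompt: "Hello" });
console.log(settings.baseURL);
```

Keeping the settings in one place means the same object can later carry extra headers, such as the Respan parameters described in step 5.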


5. Add Respan parameters

Adding Respan parameters to the Vercel AI SDK works differently than in other frameworks. It takes two steps:

Specify Respan params in an object

Create an object whose keys are the Respan parameters you want to use.

Encode the object as a string

Encode the object as a string, then send it as a header in your request.
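The two steps above can be sketched as follows. Several details here are assumptions, not confirmed by this page: the header name `X-Data-Respan-Params`, the `customer_identifier` key, and the choice of JSON followed by base64 as the string encoding. Check the Respan reference for the exact header name, parameter keys, and encoding before relying on this.

```typescript
// Hypothetical parameters; use the keys that Respan documents.
const respanParams: Record<string, unknown> = {
  customer_identifier: "customer_123",
};

// Hypothetical header name; replace with the one Respan documents.
const RESPAN_PARAMS_HEADER = "X-Data-Respan-Params";

// Encode a params object as a header-safe string.
// Assumption: JSON followed by base64. btoa() only handles Latin-1 input,
// so this sketch expects ASCII parameter values.
function encodeParams(params: Record<string, unknown>): string {
  return btoa(JSON.stringify(params));
}

// Headers object carrying the encoded parameters.
const headers: Record<string, string> = {
  [RESPAN_PARAMS_HEADER]: encodeParams(respanParams),
};

// The headers can then be passed to the provider factory, for example:
//   createOpenAI({ baseURL, apiKey, headers }) from @ai-sdk/openai
console.log(headers);
```

Sending the parameters as a header keeps them out of the request body, so the body stays in the shape the SDK helper already produces.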