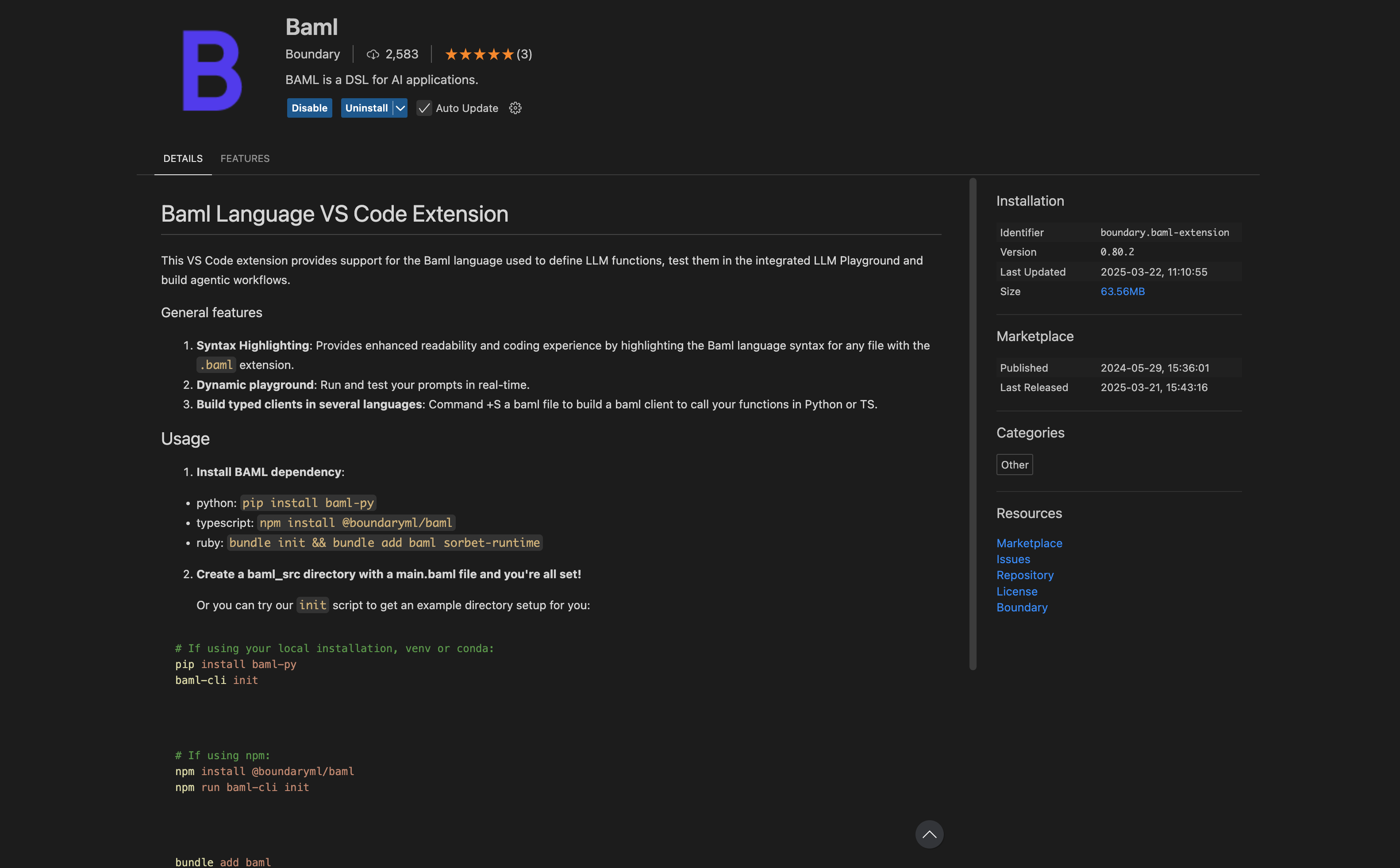

Install extension

Install the BAML extension in VS Code. Create a .baml file (e.g., test.baml) and get started!

Integration methods

There are two ways to integrate Respan with BAML so that you can send Respan params and inspect them on the Respan platform:

- Send Respan params through a header.

- Work at a lower level and send params to Respan through the client.

Send Respan params through header

Set up a BAML Client Registry and Respan integration

First, you need to set up a client registry in BAML. This registry manages your LLM clients.
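As a rough illustration of the header approach, the sketch below builds the options for an OpenAI-compatible client that carries Respan params in a request header. Several things here are assumptions rather than confirmed Respan details: the base URL is a placeholder, the header name `X-Data-Respan-Params` and the base64-encoded JSON format are hypothetical, and `customer_identifier` is only an example param. Check the Respan documentation for the real values.

```python
import base64
import json
import os

# Placeholder and hypothetical values: replace with the ones from the Respan docs.
RESPAN_BASE_URL = "https://respan.example/api/"   # placeholder, not a real endpoint
RESPAN_PARAMS_HEADER = "X-Data-Respan-Params"     # hypothetical header name


def build_respan_client_options(model, respan_params, api_key=None):
    """Return an options dict for an OpenAI-compatible BAML client.

    Assumes Respan params travel as base64-encoded JSON in a single header.
    """
    encoded = base64.b64encode(
        json.dumps(respan_params).encode("utf-8")
    ).decode("ascii")
    return {
        "model": model,
        "base_url": RESPAN_BASE_URL,
        # Fall back to the RESPAN_API_KEY environment variable.
        "api_key": api_key or os.environ.get("RESPAN_API_KEY", ""),
        "headers": {RESPAN_PARAMS_HEADER: encoded},
    }


options = build_respan_client_options(
    "gpt-4o-mini",                          # example model name
    {"customer_identifier": "user_123"},    # example param
    api_key="test-key",
)

# With baml-py installed, the options could be registered roughly like this:
#   from baml_py import ClientRegistry
#   registry = ClientRegistry()
#   registry.add_llm_client(name="RespanClient", provider="openai", options=options)
#   registry.set_primary("RespanClient")
# and the registry passed to a generated BAML function through its
# client_registry option.
```

Keeping the option-building step separate from the registry calls makes it easy to inspect exactly what will be sent before any request is made.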


With this setup, you can:

- Track and monitor all LLM requests

- Analyze performance and usage patterns

- Associate custom identifiers with your LLM calls

Using Respan client in BAML

Initialize a Respan client in BAML

Initialize a Respan client in BAML by switching the base URL and setting RESPAN_API_KEY as an environment variable. Learn how to create a Respan API key here.
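A minimal sketch of such a client definition might look like the following. The client name `RespanClient`, the model, and the base URL are placeholders chosen for illustration; use the endpoint given in the Respan documentation.

```baml
// Sketch only: the name, model, and base_url are placeholders.
client<llm> RespanClient {
  provider openai
  options {
    model "gpt-4o-mini"
    base_url "https://respan.example/api/"
    api_key env.RESPAN_API_KEY
  }
}
```

Any BAML function can then reference this client by name in its `client` field.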


Test & monitor

Once the code is in place, press Cmd+Shift+P, select BAML: Open in Playground, and run your tests. You can also monitor your LLM performance in the Respan dashboard. Sign up here: https://www.respan.ai